Image Aesthetics Assessment with AI

TL;DR: An introduction to the image aesthetics assessment problem and the artificial intelligence based solutions for it. A Vietnamese version of this blog post is also available.

Table of Contents

- Introduction

- Why is Image Aesthetics Assessment Important?

- How do We Assess Image Aesthetics?

- Image Aesthetics Assessment with AI

- Discussion

- References

Should have background knowledge

Introduction

Aesthetics [1,2,3] (noun) is the formal study of art, especially in relation to the idea of beauty.

Image aesthetics assessment (IAA) [3,4], when referred to as a problem solved by machines, is a computer vision problem that aims to categorize images into different aesthetic levels; i.e., to make decisions about image aesthetics similar to those made by humans.

Related terms

- Image quality assessment

- Image memorability

- Image cropping

Why is Image Aesthetics Assessment Important?

Have you ever asked yourself whether you have tried to judge the aesthetics of a photo? I bet most of you reading this blog already do this, even daily. It doesn’t have to be anything fancy: the act of assessing image aesthetics occurs while you are taking a photo. You adjust the shooting angle, adjust the light, change the aperture of the lens, or ask the model to change her pose, all to get the best picture you can.

Besides spiritual value, beautiful photos can bring great material value as well. That is why we have experts who judge photo quality. That is why we have image-based social networks like Instagram and Pinterest. That is why we have a stock photography market; e.g., Pixtastock in Japan and the big player Shutterstock, just to name a few.

Another reason this problem is important, in my opinion, is that the need to find beautiful photos on the Internet will increase day by day. As the number of shared photos grows larger and larger, it can become more difficult to find an eye-catching photo. Perhaps, in the near future, sorting photos by aesthetic level will become a standard feature of all search engines.

How do We Assess Image Aesthetics?

Now we will see how humans and machines do the job of assessing the aesthetics of photos.

Humans

Each person surely has his or her own assessment of image aesthetics; however, to evaluate systematically, we need to define aesthetic criteria.

For example:

- Composition

- Lighting

- Object emphasis

- The beauty of the model

- The beauty of the pattern

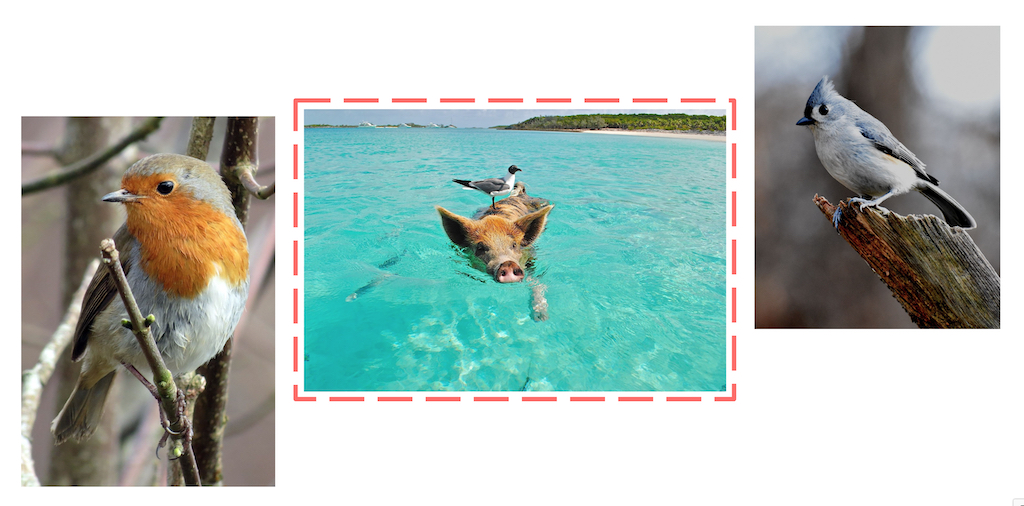

- Uniqueness of content

- …

In terms of composition, there are many ways to lay out an image. The most popular is probably the rule of thirds, followed by symmetry and many others.

Satisfying certain compositional criteria can make a photo look better. This short video (instagram.com/reel/CaPxY2pqOH0/, shared by mitchleeuwe) can help you better understand this aesthetic criterion.

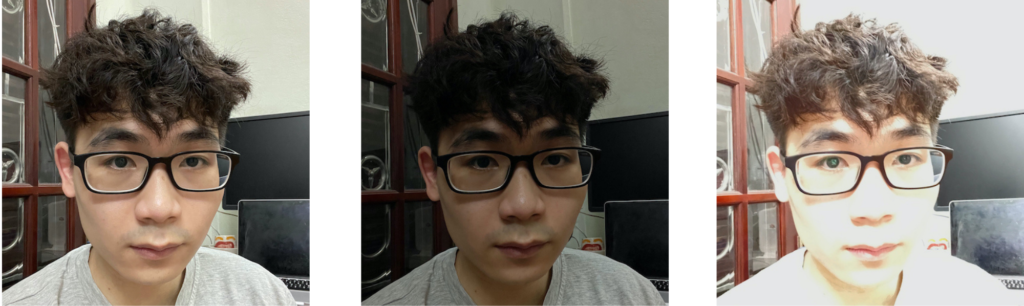
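
To make the rule of thirds a little more concrete, here is a minimal sketch of how it could be turned into a number. It assumes we already know the subject's center in pixel coordinates (how to find it is a separate problem), and it scores how close that center is to the nearest of the four thirds intersections. The function name `thirds_score` and the normalization by the corner distance are illustrative choices of mine, not a method from the cited works.

```python
import math


def thirds_score(width, height, subject_x, subject_y):
    """Score in [0, 1]: 1.0 when the subject sits exactly on a thirds
    intersection, 0.0 when it sits in an image corner (hypothetical scoring)."""
    # The four intersections of the lines that split the frame into thirds.
    points = [(width * i / 3, height * j / 3) for i in (1, 2) for j in (1, 2)]
    nearest = min(math.hypot(subject_x - px, subject_y - py) for px, py in points)
    # The farthest any in-frame point can be from its nearest intersection
    # is the distance from a corner to the closest intersection.
    max_dist = math.hypot(width / 3, height / 3)
    return max(0.0, 1.0 - nearest / max_dist)


if __name__ == "__main__":
    print(thirds_score(300, 300, 100, 100))  # subject on an intersection
    print(thirds_score(300, 300, 150, 150))  # subject dead center
```

A centered subject gets a middling score here, which matches the intuition that the rule of thirds is only one of several composition criteria (symmetry would favor the centered subject instead).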

Regarding the lighting factor, a photo with good lighting can be defined as one with sufficient light, without underexposure or overexposure.
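
As a rough illustration of how this lighting criterion could be checked mechanically, the sketch below flags a photo as underexposed or overexposed from the share of very dark or very bright pixels. The function name `exposure_report`, the brightness levels (30 and 225 on a 0-255 scale), and the 50% cutoff are assumptions picked for the example, not values taken from any reference in this post.

```python
def exposure_report(gray_pixels, dark_level=30, bright_level=225, max_fraction=0.5):
    """Classify a grayscale image, given as a flat list of 0-255 values.

    All thresholds are illustrative assumptions.
    """
    total = len(gray_pixels)
    if total == 0:
        raise ValueError("image has no pixels")
    # Fraction of pixels that are nearly black / nearly white.
    dark = sum(1 for p in gray_pixels if p <= dark_level) / total
    bright = sum(1 for p in gray_pixels if p >= bright_level) / total
    if dark > max_fraction:
        return "underexposed"
    if bright > max_fraction:
        return "overexposed"
    return "well exposed"


if __name__ == "__main__":
    mostly_dark = [10] * 80 + [128] * 20
    print(exposure_report(mostly_dark))
```

A real system would likely look at the full histogram shape rather than two fixed thresholds, but even this crude rule shows why lighting counts as a criterion that can be measured.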

Emphasizing the main subject can also make a photo more beautiful. By manipulating elements such as contrast, isolation, convergence, etc., artists can direct the viewer’s eyes to certain locations on the image to serve their artistic intent.

The assessment of the attractiveness of models and textures is more subjective, mostly coming from the personal point of view of the reviewer.

Depending on one’s experience, gender, nationality, religion, etc., each person’s assessment of image aesthetics can be different.

Judging the uniqueness of image content may even require experts to choose the photo with the most unique content.

There are many other criteria that we can define to assess the aesthetics of photos. Clearly, this is not an easy task for humans, since there are many factors to take into consideration. So, can machines do this job for us?

Machines

Before answering the question of whether a machine can assess the aesthetics of images, we will classify the aesthetic criteria listed above into two groups:

- Technical-related criteria

  - Composition

  - Lighting

  - Object emphasis

- Content-related criteria

  - The beauty of the model

  - The beauty of the pattern

  - Uniqueness of content

The word “technical” hints at the mechanical nature of the criteria in the first group. When humans can evaluate a criterion quantitatively, the decisions are more objective, and it is easier to teach a machine how well that criterion is satisfied for each input image.

In contrast, the criteria related to the content of the image involve many subjective opinions. Finding rules for them is therefore harder, and so is evaluating them mechanically.

In the next section, we will see how researchers address this issue with machines. For now, we know that in order to fully assess the aesthetics of an image, we will need to consider both objective and subjective factors. This poses challenges for humans in transferring this knowledge to the machine in order to simulate the process of assessing image aesthetics.

Image Aesthetics Assessment with AI

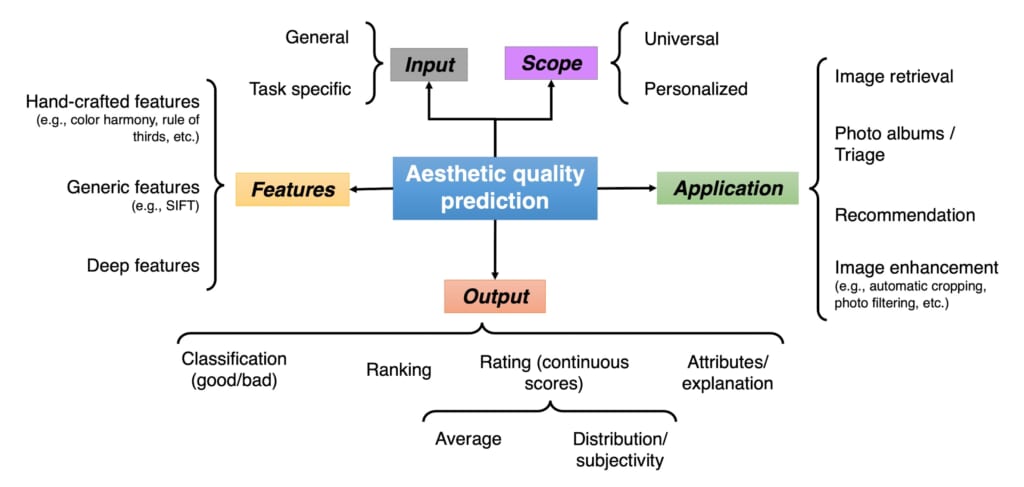

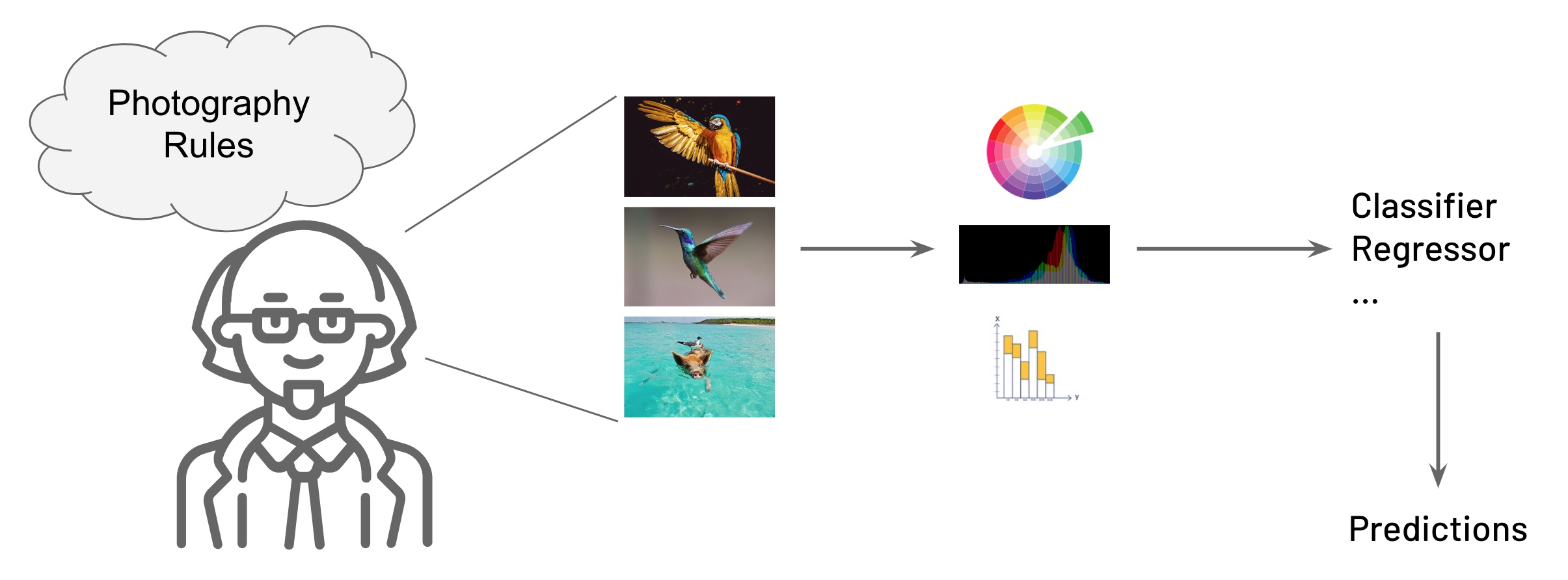

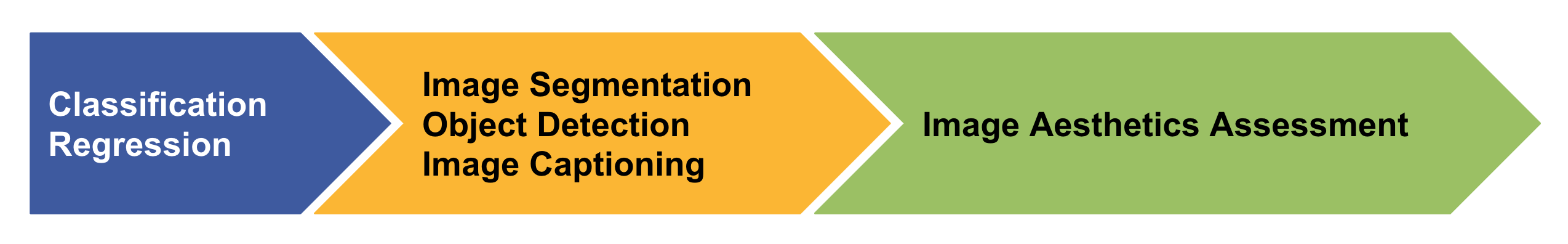

The figure above gives us the big picture of IAA. The five aspects that make up this picture are:

- input,

- scope,

- features,

- output,

- and application.

In this blog post, we will focus on only three of them: features, output, and scope.

Features

First of all, the features. If we think of a solution to the IAA problem as a mill, then we must provide materials for the mill to do its job, and those materials are the features. In the studies on image aesthetics assessment so far, the materials could be…

Hand-crafted features

Drawing on knowledge of photography, physics, mathematics, and possibly many other fields, experts design the features they think best represent images. For instance, by analyzing the color histogram of photos, perhaps we can find rules that distinguish an image with harmonious colors from others.

Works that use hand-crafted features are mostly older, and their results fall short of those obtained by later approaches. However, with these features it is easy to identify the factors that lead to a decision about image aesthetics.
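
The color histogram mentioned above is a typical hand-crafted feature, and a minimal sketch of it fits in a few lines. The function name `color_histogram` and the choice of a joint RGB histogram with a few bins per channel are assumptions for illustration; the works in this area use a variety of color spaces and binning schemes.

```python
def color_histogram(pixels, bins_per_channel=4):
    """Normalized joint RGB histogram.

    pixels: list of (r, g, b) tuples with values in 0-255.
    Returns a list of bins_per_channel ** 3 values that sum to 1.
    """
    if not pixels:
        raise ValueError("image has no pixels")
    bins = bins_per_channel
    hist = [0.0] * (bins ** 3)
    for r, g, b in pixels:
        # Map each 0-255 channel value to a bin index in [0, bins - 1].
        ri, gi, bi = r * bins // 256, g * bins // 256, b * bins // 256
        hist[(ri * bins + gi) * bins + bi] += 1
    total = len(pixels)
    return [count / total for count in hist]


if __name__ == "__main__":
    reds_and_blues = [(255, 0, 0), (250, 10, 5), (0, 0, 255), (0, 0, 250)]
    print(color_histogram(reds_and_blues, bins_per_channel=2))
```

Because every bin corresponds to a known color range, a rule built on top of this vector stays easy to interpret, which is exactly the strength of hand-crafted features noted above.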

Generic features

These are features extracted from images that are not designed for a specific problem; e.g., Bag of Visual Words [5], Fisher Vectors [6], etc. By achieving better results than the older approaches, solutions that use generic features have demonstrated that the aesthetic features of an image are implicitly embedded in generic features. This brings us closer to the era of features learned directly from the data: features learned by deep learning models, or deep features for short.

Deep features

Features learned directly from data by deep learning models do not require prior knowledge of IAA. The deep learning model automatically learns the rules from the input data (images, in this case) and the corresponding labels, or answers, prepared by humans. The advantage of this method is that it gives much better results than hand-crafted features. However, it needs a huge amount of data to work, and it is almost impossible to explain the decisions that a deep learning model makes when assessing the aesthetics of an image.

A few popular deep learning models that are frequently used in recent works about IAA include: AlexNet [7], VGG [8], MobileNet [9,10,11], ResNet [12].

Output

Once we provide the mill with materials, we definitely want it to output something for us. The output types of the IAA problem can be divided into four groups, corresponding to four problems: classification, regression, ranking, and explanation.

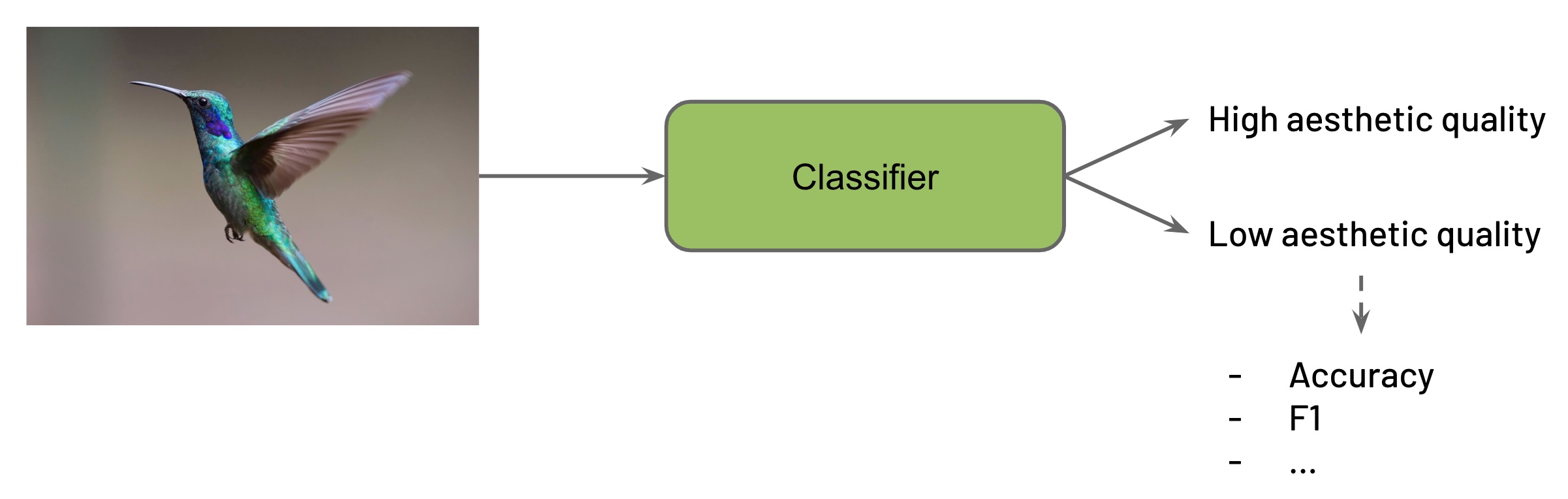

Classification problem

For the classification problem, we expect the solution to assign an image to one of several aesthetic levels: either low/high aesthetic quality (binary classification) or aesthetic scores within a given range (multi-class classification). The commonly used metrics for this problem are ones you might already be familiar with, such as accuracy, F1 score, etc.

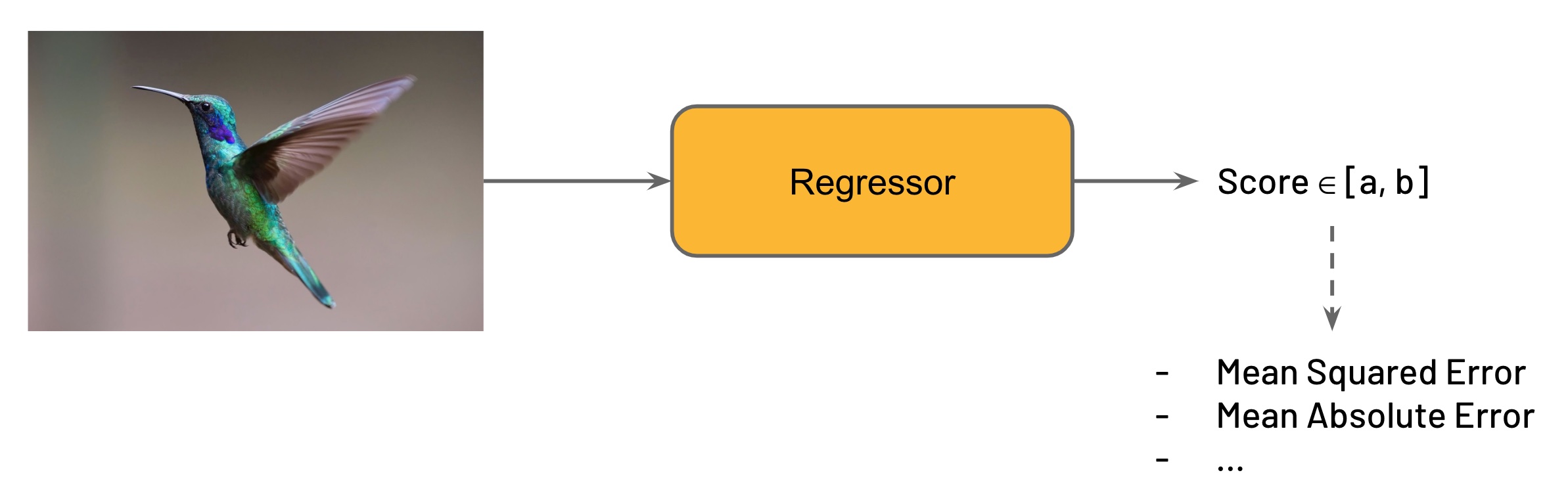
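
For the binary case, a minimal sketch of the evaluation could look like the following. It assumes labels are obtained by thresholding a mean score (the threshold of 5.0 and the helper name `binarize` are my assumptions for illustration), then computes accuracy and F1 score from scratch.

```python
def binarize(mean_scores, threshold=5.0):
    """Turn mean aesthetic scores into 1 (high) / 0 (low) labels.

    The threshold is an illustrative assumption.
    """
    return [1 if s > threshold else 0 for s in mean_scores]


def accuracy_and_f1(y_true, y_pred):
    """Accuracy and F1 score (positive class = 1) for binary labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    accuracy = correct / len(y_true)
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return accuracy, f1


if __name__ == "__main__":
    truth = binarize([6.2, 5.4, 3.9, 4.8, 7.1])
    predicted = [1, 0, 0, 1, 1]
    print(accuracy_and_f1(truth, predicted))
```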

Regression problem

For the regression problem, an image is graded aesthetically with a real number. Common metrics used for this problem include mean squared error (MSE), mean absolute error (MAE), etc.

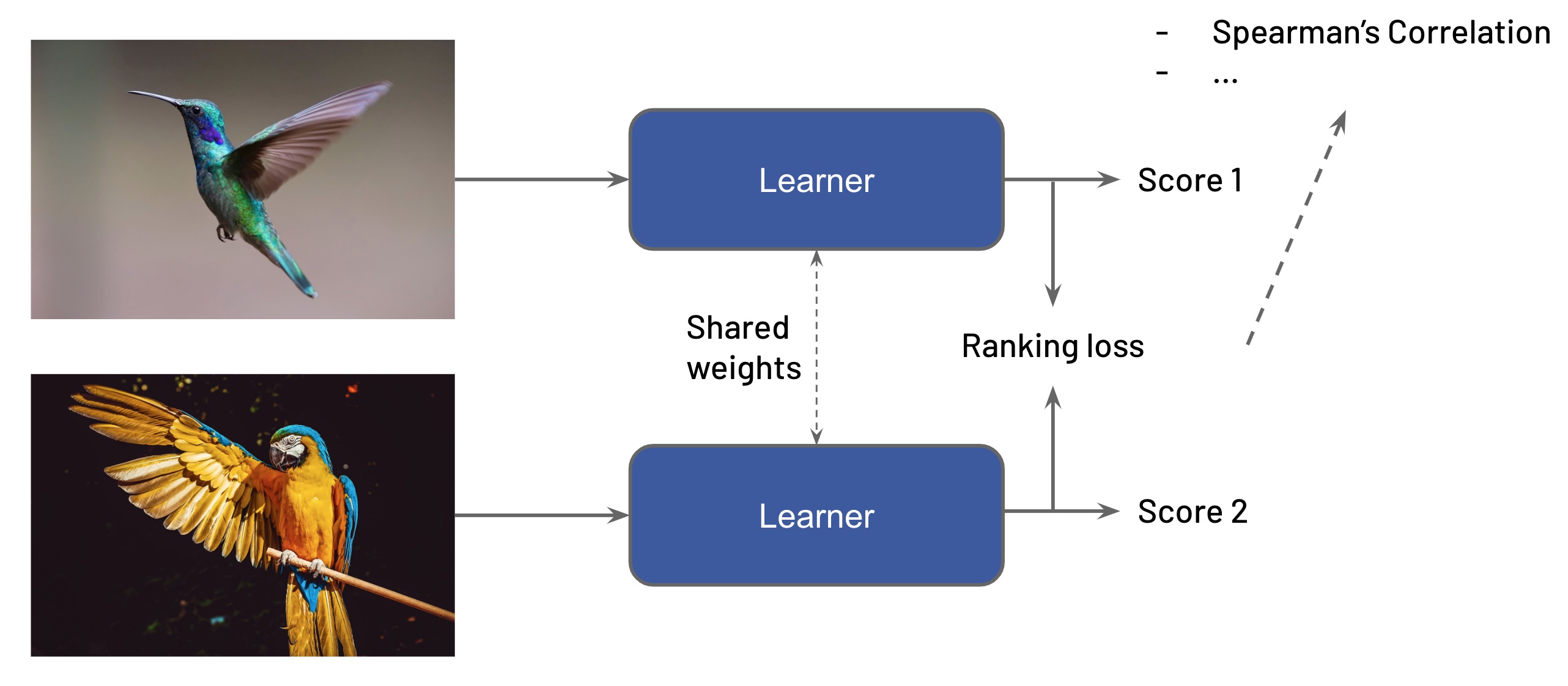
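
Both regression metrics are simple to compute. The sketch below uses made-up scores purely as an example.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences between true and predicted scores."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average of absolute differences between true and predicted scores."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    true_scores = [5.0, 6.5, 4.0]   # made-up mean aesthetic scores
    predictions = [5.5, 6.0, 5.0]   # made-up model outputs
    print(mean_squared_error(true_scores, predictions))
    print(mean_absolute_error(true_scores, predictions))
```

MSE punishes large errors more heavily than MAE, so a model that is occasionally far off looks worse under MSE than under MAE.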

Ranking problem

For the ranking problem, a popular architecture is the Siamese network [13] (two networks with shared weights) combined with a loss function for ranking. In addition, there is the Triplet Network [14] (three networks with shared weights) combined with Triplet Loss [14]. Spearman’s rank correlation coefficient is the commonly used metric for this problem.
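
To make the ranking setup more tangible, here is a minimal sketch of two pieces: a pairwise margin (hinge) loss of the kind often paired with Siamese networks, and Spearman's rank correlation computed as the Pearson correlation of average ranks. The margin loss is one common choice that I am assuming for illustration; the works cited here may use a different ranking loss.

```python
import math


def margin_ranking_loss(score_better, score_worse, margin=1.0):
    """Zero once the better image outscores the worse one by at least `margin`."""
    return max(0.0, margin - (score_better - score_worse))


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rank correlation (undefined if either input is constant)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


if __name__ == "__main__":
    human = [7.1, 4.2, 5.5, 6.0]      # made-up ground-truth scores
    model = [0.9, 0.1, 0.4, 0.6]      # made-up model scores, same order
    print(spearman(human, model))
    print(margin_ranking_loss(score_better=0.4, score_worse=0.6))
```

Note that Spearman's coefficient only cares about the order of the scores, not their scale, which is why the model outputs above can live in a different range than the human scores.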

Prediction explanation problem

Moreover, there is a group of works focused on explaining the aesthetic decisions made by machine learning models. For solutions based on convolutional neural networks, techniques such as CAM [15] and Grad-CAM [16] are used. The problem of explaining aesthetic decisions can also take the form of a multi-task learning problem that evaluates the aesthetic level of an image on multiple aesthetic criteria simultaneously [17], or the form of an image captioning problem that generates text fragments explaining the aesthetic decisions made by the solution [18].
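
The core computation behind CAM is small enough to sketch: the activation map for a class is the weighted sum of the last convolutional feature maps, using that class's weights from the final linear layer. The sketch below works on plain nested lists with made-up numbers; in practice the feature maps and weights would come from a trained network, and the function name `class_activation_map` is my own.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps (each H x W) with K class weights."""
    if len(feature_maps) != len(class_weights) or not feature_maps:
        raise ValueError("need one weight per feature map")
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


if __name__ == "__main__":
    # Two made-up 2x2 feature maps and made-up weights for one class.
    maps = [[[1, 0], [0, 0]], [[0, 0], [0, 2]]]
    weights = [0.5, 1.0]
    print(class_activation_map(maps, weights))
```

Locations with large values in the resulting map are the regions that contributed most to the prediction, which is what gets overlaid on the photo as a heat map.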

Scope

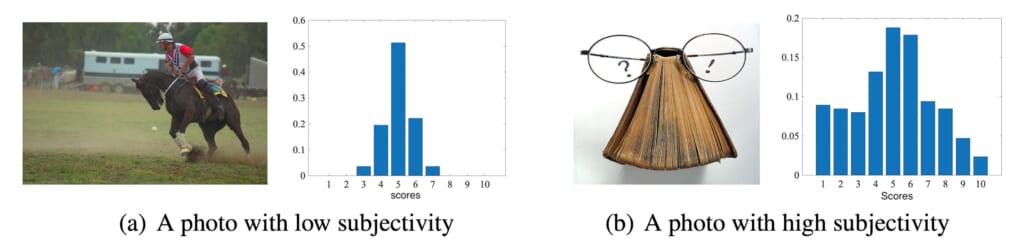

As mentioned in the sections above, the human process of assessing image aesthetics always involves both objective and subjective factors.

The usual practice to prepare labels for the IAA problem is to score each image multiple times with the participation of numerous individuals. In this way, the aesthetics of an image will be represented by a distribution of scores.

Considering the scope of the IAA problem, there are two approaches.

Generic approach

Works following this approach consider aesthetics an inherent attribute of photos, independent of any individual’s eyes. Therefore, for each input image, there is only one corresponding output that reflects the aesthetics of that image. To obtain data for model training, the simplest way is to use the expected value, or simply the mean, calculated from each image’s distribution of aesthetic scores.
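
Collapsing a score distribution into its mean takes one line of arithmetic. The sketch below assumes votes are stored as counts per score on a 1-10 scale; the scale and the vote counts are made up for the example.

```python
def mean_score(vote_counts, first_score=1):
    """Expected score from a histogram of votes.

    vote_counts[i] is the number of raters who gave score first_score + i.
    """
    total = sum(vote_counts)
    if total == 0:
        raise ValueError("no votes")
    return sum((first_score + i) * c for i, c in enumerate(vote_counts)) / total


if __name__ == "__main__":
    # Made-up votes for scores 1..10 from ten raters.
    votes = [0, 0, 1, 2, 4, 2, 1, 0, 0, 0]
    print(mean_score(votes))
```

The mean throws away how much the raters disagreed: a photo that everyone rates 5 and a photo that splits raters between 1 and 9 end up with the same label.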

Some works even design solutions that directly learn the aesthetic score distributions instead of learning only the expected values. Calculating the error between the model’s predictions and the labels then requires tools that measure the distance between probability distributions, such as Earth mover’s distance (EMD), Chi-square distance, KL divergence, etc. A typical example of this design is NIMA [19]. Although it is not the first work to use EMD to learn aesthetic score distributions, NIMA is still the best-known one. Implementations of this work are available for popular deep learning frameworks such as TensorFlow, Keras, and PyTorch.
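
For ordered score buckets, EMD can be computed from the difference between the two cumulative distributions. The sketch below follows the normalized form with exponent r that, as far as I recall, NIMA [19] uses with r = 2; treat the exact normalization as an assumption and check the paper before relying on it.

```python
def emd(p, q, r=2):
    """Normalized earth mover's distance between two score distributions.

    p and q are probability lists over the same ordered score buckets.
    """
    if len(p) != len(q) or not p:
        raise ValueError("distributions must be non-empty and of equal length")
    cdf_p = cdf_q = 0.0
    total = 0.0
    for pk, qk in zip(p, q):
        # Compare cumulative mass up to each score bucket.
        cdf_p += pk
        cdf_q += qk
        total += abs(cdf_p - cdf_q) ** r
    return (total / len(p)) ** (1 / r)


if __name__ == "__main__":
    label = [0.0, 0.1, 0.6, 0.3, 0.0]       # made-up ground-truth distribution
    prediction = [0.1, 0.2, 0.4, 0.2, 0.1]  # made-up model output
    print(emd(label, prediction))
```

Unlike KL divergence, this distance grows with how far the probability mass has to travel along the score axis, so predicting "mostly 4" for a "mostly 5" image costs less than predicting "mostly 1".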

Personalized approach

Besides the generic approach to the IAA problem, there are works that deal with this problem in a personalized manner. The common approach of these works is to start from a generic model, as described in the section above, and then use each individual’s aesthetic labels to train a dedicated model for that person. Collecting such labeled data is sometimes quite expensive, or even impossible. To get around this problem, some works suggest utilizing other sources of personal data, such as social network usage behavior.

So, with the three aspects we have just walked through, we now have a big picture of the IAA problem and of the approaches researchers use to deal with this complex problem.

Discussion

To close this blog post, I would like to share some thoughts I had when I started researching this topic.

For me, the most difficult part when studying the problem of IAA is how to clearly define the criteria for aesthetic assessment; here, I only mention the criteria that are considered to be technically assessable. Sometimes, the definition of an aesthetic criterion also determines whether this criterion can be assessed objectively or not. The more clearly defined the criteria, the easier it is for humans or even machines to judge.

To this day, I still wonder whether the deep learning solutions for assessing image aesthetics, even though they achieve so-called “impressive” numbers and claim to be better than those proposed in previous works, have actually learned something, or are simply OVERFITTING to the test set of a public dataset. With deep learning models, it is tempting not to care much about how the model learns or what it learns: just provide input in the right format and an output will eventually come out.

Personally, I think it will take much longer before researchers understand the IAA problem as well as they understand image classification, object detection, etc.; that is, before we reach the stage where machines surpass human capabilities and just a few percent more on a metric determines a SOTA model. Hopefully, we will see breakthroughs in image aesthetics understanding and IAA problem-solving in the next 5–10 years.

All opinions given here are based purely on my own knowledge of the IAA problem and on subjective inferences; therefore, you may find some inaccurate statements. I look forward to comments from the community to gain a deeper understanding of this interesting topic.

See ya in the next blog post!

References

[1] Cambridge Dictionary

[2] Merriam-Webster Dictionary

[3] Advances and Challenges in Computational Image Aesthetics

[4] A Survey on Image Aesthetic Assessment

[5] Visual Categorization with Bags of Keypoints

[6] Exploiting Generative Models in Discriminative Classifiers

[7] ImageNet Classification with Deep Convolutional Neural Networks

[8] Very Deep Convolutional Networks for Large-Scale Image Recognition

[9] MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications

[10] MobileNetV2: Inverted Residuals and Linear Bottlenecks

[11] Searching for MobileNetV3

[12] Deep Residual Learning for Image Recognition

[13] Learning a Similarity Metric Discriminatively, with Application to Face Verification

[14] Deep metric learning using Triplet network

[15] Learning Deep Features for Discriminative Localization

[16] Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization

[17] Photo Aesthetics Ranking Network with Attributes and Content Adaptation

[18] Aesthetic Image Captioning From Weakly-Labelled Photographs

[19] NIMA: Neural Image Assessment

Sources of images used in this blog post

- https://xframe.io/photos/22262

- https://madhansart.com/emphasis-in-art/

- https://www.flickr.com/photos/120139997@N08/14533502625/

- https://www.flickr.com/photos/timw1/49061321922/

- https://www.freepik.com/free-vector/memphis-style-pattern-design_1065872.htm

- https://www.pexels.com/photo/nature-bird-red-winter-46166/

- https://www.pexels.com/photo/white-and-gray-bird-on-the-bag-of-brown-and-black-pig-swimming-on-the-beach-during-daytime-66258/

- https://www.pexels.com/photo/grey-bird-perched-on-a-tree-branch-2662434/

- https://www.pexels.com/photo/photo-of-yellow-and-blue-macaw-with-one-wing-open-perched-on-a-wooden-stick-2317904/

- https://www.pexels.com/photo/macro-photography-of-colorful-hummingbird-349758/

Writer: Phung Trong Hieu – AI Engineer at Pixta Vietnam

Cre: Image Aesthetics Assessment with AI
